Fundraising campaign appeal tracking is an art, but it's not hard to master. It's more than just calculating how many people responded to a direct mail piece: it's an ongoing series of tests that, over time, allow you to improve the results of your fundraising appeals and increase your return on investment (ROI). The Target Analytics' professional services consulting team often recommends annual fund appeal tests to customers because these tests show which marketing pieces resonate more with your target market, so you don't have to rely on guesswork. This kind of test, called an A/B-split test, should be a vital component of your annual fund, grateful patient or other mass-market solicitation mailing activities.

A/B-split testing occurs when mail pieces are separated into two distinct versions, with only one element different between them.

You need only five things to conduct a test:

- A constituent group for the test

- A defined test element

- An appeal letter and reply method

- Coding in your CRM

- A calculator

A/B-split testing is easier to conduct than you probably imagine. You simply create two versions of your mailer that are exactly the same except for one critical element. You send one version to half of your mailing list and the other version to the other half, then track the results to see which mailer generates the higher response rate. In this way, you can determine which mailer is more effective.
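If your CRM has no split function, a random split of your list can be sketched in a few lines of Python. This is a minimal sketch, assuming your query results are available as a list of constituent IDs; `split_ab` and the sample IDs are hypothetical, not a CRM feature. Shuffling with a fixed seed lets you reproduce the same split later.

```python
import random


def split_ab(record_ids, seed=None):
    """Randomly assign records to Group A or Group B (roughly 50/50)."""
    ids = list(record_ids)
    rng = random.Random(seed)  # a fixed seed makes the split reproducible
    rng.shuffle(ids)
    half = len(ids) // 2
    return ids[:half], ids[half:]


# Hypothetical constituent IDs; in practice these come from your CRM query.
records = [f"C{n:05d}" for n in range(1, 2014)]
group_a, group_b = split_ab(records, seed=2016)
print(len(group_a), len(group_b))  # 1006 1007
```

Unlike a split by last-name ranges, a shuffle doesn't tie group membership to any attribute of the donor.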

Here’s how a split-test works.

- Determine what you want to test. For example: Which solicitation produces a higher average gift amount?

- Choice A: No suggested gift amount (donor chooses his/her gift amount)

- Choice B: Suggested ask amount string (gift amounts are suggested to the donor)

- Determine which constituent records will be used in the test. For example:

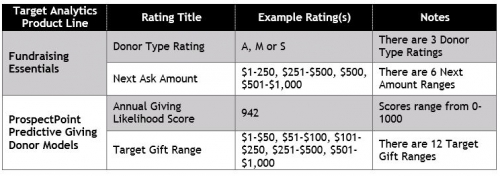

- Example 1 (Fundraising Essentials scores): Records with Donor Type Rating A and Next Ask Amount $1-$250 and with a gift of $1-$25 within the past 24 months

- Example 2 (ProspectPoint Modeling scores): Records with Annual Giving Likelihood scores between 501 and 1000 and Target Gift Range $1-$50 (TGR 1) and with a gift of $1-$25 within the past 24 months

- Query your database to gather the appropriate records from item 2 above.

- Randomly split the records into 2 groups - approximately 50% / 50% (though this does not have to be exact). You can do this with your CRM if you have a split function or by other criteria such as all A-M Last Names and all N-Z Last Names.

- You will have Group A and Group B

- For example Group A has 1,057 records and Group B has 956 records

- You will have Group A and Group B

- Code each record with an appeal code so that you know which record received which solicitation (Group A is mailed Letter A and Group B is mailed Letter B). Coding and barcode scanning may be available in your CRM or through your mail house vendor. If you do not have a coding system, here’s an easy one:

- Group A is coded for the Letter A appeal: YearMoDayAppealname-Package# (20161115AFTest1-A)

- Group B is coded differently for the Letter B appeal: (20161115AFTest1-B)

- Create/print your appeal letters. Since our example is testing a blank gift amount against a suggested string of gift amounts, the only difference in the letters will be the ask amount line or the line on the reply card. The language and layout of the letter should be as identical as possible. Here’s how the appeal language might be different from letter A to letter B:

- Letter A: ...your gift, in an amount of your choosing, goes to work immediately providing emergency food and shelter to abandoned animals in our community.

- Letter B: ...your gift of $30, $40 or $50 goes to work immediately providing emergency food and shelter to abandoned animals in our community.

- Note, you can also mimic the choice differences on a reply card you’ve inserted:

- Reply card A: Here is my gift of $_______________ (write in a gift amount of your choosing) to provide needed food and shelter to abandoned animals

- Reply card B: Here is a gift of $30, $40, $50 (circle one) to provide needed food and shelter to abandoned animals

- After responses have been received and entered into your CRM constituent records, analyze the results, report and determine next steps.
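If you generate appeal codes yourself rather than through your CRM or mail house, the YearMoDayAppealname-Package# scheme from the coding step can be sketched in Python. The function names and sample constituent IDs below are hypothetical; the sketch simply tags every record in a group with that group's code.

```python
from datetime import date


def appeal_code(mail_date, appeal_name, package):
    """Build a code in the YearMoDayAppealname-Package# format, e.g. 20161115AFTest1-A."""
    return f"{mail_date:%Y%m%d}{appeal_name}-{package}"


def code_groups(group_a, group_b, mail_date, appeal_name):
    """Map each constituent ID to the appeal code of the letter it was mailed."""
    codes = {}
    for package, group in (("A", group_a), ("B", group_b)):
        code = appeal_code(mail_date, appeal_name, package)
        for record_id in group:
            codes[record_id] = code
    return codes


# Hypothetical IDs for illustration only.
codes = code_groups(["C00001", "C00002"], ["C00003"], date(2016, 11, 15), "AFTest1")
print(codes["C00001"])  # 20161115AFTest1-A
print(codes["C00003"])  # 20161115AFTest1-B
```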

Reporting Your Results

Pull the data you need to report on your results. Here are some items you could review and report. Remember that our test was designed to determine which letter produced a higher average gift amount, so that's the primary piece of information we want to review.

- Response rate (renewal rate)
  - Group A received 589 responses
  - 589 responses divided by 1,057 records sent Letter A = 55.72% response rate
  - Group B received 601 responses
  - 601 responses divided by 956 records sent Letter B = 62.87% response rate

- Average gift amount
  - Group A produced total revenue of $11,520.84
  - $11,520.84 divided by 589 responses = average gift of $19.56
  - Group B produced total revenue of $20,788.59
  - $20,788.59 divided by 601 responses = average gift of $34.59

- Lift in revenue
  - Group A provided total revenue of $11,520.84 and Group B provided total revenue of $20,788.59
  - Lift in revenue is calculated as: Lift = [Test (Group B) minus Control (Group A)] divided by Control (Group A) times 100
  - ($20,788.59 - $11,520.84) / $11,520.84 x 100 = 80.44%
  - The ask-string test letter (Group B) provided a lift in revenue of 80.44% over the control letter (Group A)!
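The report arithmetic above can be reproduced in a short Python sketch using this example's figures. `summarize` and `lift_pct` are hypothetical helper names; plain floats are fine for a quick check, though `decimal.Decimal` is a safer choice for accounting-grade currency math.

```python
def summarize(records_mailed, responses, revenue):
    """Response rate (%) and average gift for one test group."""
    return {
        "response_rate_pct": responses / records_mailed * 100,
        "average_gift": revenue / responses,
    }


def lift_pct(test, control):
    """Lift = (test - control) / control * 100."""
    return (test - control) / control * 100


a = summarize(1057, 589, 11520.84)   # Group A (control, Letter A)
b = summarize(956, 601, 20788.59)    # Group B (test, Letter B)
revenue_lift = lift_pct(20788.59, 11520.84)

print(f"A: {a['response_rate_pct']:.2f}% response, ${a['average_gift']:.2f} average gift")
print(f"B: {b['response_rate_pct']:.2f}% response, ${b['average_gift']:.2f} average gift")
print(f"Revenue lift: {revenue_lift:.2f}%")  # Revenue lift: 80.44%
```

Because Group A and Group B differ in size, you could also compare revenue per record mailed (total revenue divided by records sent) by passing those two values to `lift_pct`.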

Our example test's results were statistically significant at the 99% confidence level!
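Which statistical test produced the 99% figure isn't stated here. One common check for a difference in response rates is a pooled two-proportion z-test, sketched below with the example counts; the function name is hypothetical. Testing the difference in average gift would instead need the individual gift amounts (for example, for a t-test), which the summary figures above don't include.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value


# Responses / records mailed for Group A and Group B.
z, p = two_proportion_z_test(589, 1057, 601, 956)
print(f"z = {z:.2f}, p = {p:.4f}")  # p is roughly 0.001 for these counts
print("Significant at 99%" if p < 0.01 else "Not significant at 99%")
```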

Download my spreadsheet resource to make analyzing your split-test results easier!

You can see how informative and useful A/B-split testing can be to your annual fund program. Over time, you can increase the number of tests you conduct annually, as well as begin to use more sophisticated segmentation. The Direct Marketing Association (DMA) is an excellent resource for courses and information for both commercial and nonprofit organizations on this topic and others. Check them out for membership and regional roundtables as well.
