Sharing SKY Bridge - an Airbyte Connector for NXT

An open-source Airbyte connector that enables seamless data integration between Blackbaud NXT (via SKY API) and 50+ modern data platforms including Snowflake, BigQuery, PostgreSQL, and more.

Full details here:

This is a work in progress, and I welcome your feedback.

*important update/note: TL;DR: this connector is a preview and subject to change; if you're not comfortable with manual updates, wait until it's officially hosted by Airbyte before fully integrating into your production workflows. See updates at bottom of this post for Airbyte submission status. Until then, test and sync to check out the initial capabilities.

Longer version: This connector is in development on my personal git. Until its pushed to Airbyte's catalog and hosted by them in their git, usage of the connector from my git constitutes forking your own copy/snapshot. I encourage people to use the connector as needed, test it, customize it, and help develop it (reach out if you're interested, I have a few to-do's!) — but just know that downstream updates, fixes, and enhancements to the connector won't be available as auto-upgrades in airbyte until officially hosted in their catalog. Until then, you'll want to manually re-deploy this custom connector to stay up to date with bug fixes and enhancements.

*update 9/15: connector submission to Airbyte for 'no code' 1-click install from their catalog is in progress. You can track the status of the PR here:

Answers

-

Thank you for sharing.

Can you give a breakdown on "why Airbyte" vs Power Automate?

0 -

Sure! I respect the work being done with power automate - the official Blackbaud connectors and all the creative flows developed and shared by the community are fantastic for workflow automation. From my perspective, I would actually argue that power automate and airbyte are complementary tools for solving different problems.

TL;DR: I see Airbyte best for moving the data, while Power Automate is best for creating workflows from the data.

I think power automate excels at:

-Workflow automation (gift acknowledgement, notifications, action creation, etc.)

-Event-driven processes for human interaction

I think Airbyte is probably a better choice for:

-High-volume data handling (millions of records)

-'warehouse loading' (Snowflake, BigQuery, etc.)

-Complex transformations and CDC (if necessary - haven't really gone down that road yet but the tools look juicy sweet)I like how Power Automate handles scenarios like "when a gift comes in, trigger an acknowledgment."

However, I strongly prefer Airbyte for a job like "sync our entire donor database to the data warehouse for analytics."

I'd be interested to hear about your Power Automate implementations for data movement/mirroring though. Perhaps you've got more efficient / less complex setups than what I've experienced.

My inspiration for creating this Airbyte connector came from my experience with the Power Automate setup I inherited. The architecture involves Power Automate flows exporting query data as CSVs to SharePoint, which then supplements data gaps from the Power BI connector - all requiring a gateway VM just to maintain scheduled refreshes for dashboards. That's a lot of moving parts just to join data and keep reports fresh in Power BI (which has its own adoption challenges internally, but that's a totally separate issue). While this might work for some scenarios, for me, Airbyte simplifies it to a single pipeline directly to our data warehouse.0 -

Thanks for the information on Airbyte, I have some additional question at the end after I provide some answer to your question on Power Automate.

Yes, the way you are "exposed" to Power platform's data management is not the best. However, that's how a lot of people started. It has a lower requirement for technical understanding, but not efficient on mass data pull.

Here's what I do:

- Azure SQL Database as data warehouse

- Power Automate flows (I have 2 dozens or so of flow, each doing different data record pull), for example:

- Gift tables (one flow does a few different tables: gift, gift split, gift soft credit, gift fundraiser, pledge installment, pledge payment)

- this is done though a few API endpoints: gift list, get installments, get payment, and query API for write off (b/c write off gift type do not show in gift list).

- Azure SQL has a OPENJSON subroutine that easily process 5000 records of JSON data that easily insert in few seconds.

- All my data sync flows run every 4 hours. This is not possible to do for some tables (i.e. gift table having over 2M+, so every 4 hours is iterative pull meaning only changed records, I do a FULL gift data sync midnight, nightly, which takes 2 hours)

- The question a lot have is why do a full gift sync. This has to do with a problem on when Blackbaud thinks a gift has been changed.

- Imagine you have a gift, direct credit to A, soft credit to B. B is merged into C and B is deleted. soft credit is now to C on the gift, but this gift is not consider "changed". In the data warehouse, this gift will still be soft credit to B's constituent system id, even though B no longer exists

- The question a lot have is why do a full gift sync. This has to do with a problem on when Blackbaud thinks a gift has been changed.

- NOTE: as my data sync is mostly independence of one another, I do not have foreign key constraint

- From there, my Power BI connects to Azure data warehouse. Azure data warehouse also have VIEW created that join commonly used table with our business logic. This way I also save on some complex joining need from Power Query

The only big issue I see with Power Automate is its 2 minutes limitation on an action. For example, for table that have more than 5000 records (SKY API pull limit per call is 5000 on most), I make 1 call, OPENJSON to insert into a staging table first, once done with all records, I TRUNCATE the main table, and insert from staging into main table. However, the insert can take a long time when there is a LOT of record rows, when over 2 minutes, Power Automate will timeout on the INSERT. What I have to do is break up the insert by MOD X on the ID. Does Airbyte have this limitation?

1 -

Thanks for sharing! Yes, I think we're in agreement here - your setup isn't 'beginner friendly' in that sense. But in practicality, you achieve the same end result. So no, you don't 'need' the airbyte connector. However…

About your question: airbyte handles this! This is exactly where airbyte excels. In my current connector configuration, we use pagination to divide records into individual API calls, 100 at a time. Short calls, managed tracking **For large calls, the records/page should be updated to a much larger number. I made the mistake of attempting a full constituent sync at 100/page: my quota was reached after pulling 2,500,000 records (2.5 million / 100 records per page which is a single api call = 25,000 api calls).

I don't know what the appropriate records per page is (sounds like you're saying up to 5,000? In this case, we can configure a lot more records per call to make this much more efficient — trying this now!)

But in short, no time-based max, and with pagination, it automatically handles chunking the call according to your settings.**with respect to managed tracking: right now, it works fine as I've built it - but it still needs improvement. Here's the situation according to my understanding

When using incremental sync with last_modified parameter on SKY API endpoints, the API prevents duplicate records by adding a sort_token parameter to the next_link URL.

However, this token is embedded in the URL string, not returned as a separate field.The problem:

- Constituents endpoint: Returns sort_token inside the next_link URL (e.g.,

https://api.sky.blackbaud.com/constituent/v1/constituents?limit=100&offset=200&sort_token=abc123) - Actions endpoint: Returns continuation_token as a direct response field that can be easily extracted and used

Why it matters:

- Without using sort_token, offset-based pagination with last_modified causes duplicate records (as records modified during pagination can appear multiple times)

- Airbyte's declarative connector can easily handle continuation_token (direct field) but I still need to figure out how to extract sort_token from a URL string

Current workaround:

Using Airbyte's built-in deduplication, but this doesn't solve the root cause and still processes unnecessary duplicate records during sync.The question for the community:

How can we extract and use the sort_token from the next_link URL in a declarative Airbyte connector, similar to how continuation_token works for the actions stream?0 - Constituents endpoint: Returns sort_token inside the next_link URL (e.g.,

-

@Alex Wong your comment about 5k records/call just saved me a lot of trouble. 4,000/page is stable. Initial sync going at pace of ~3 million records/hour.

0 -

most API calls have limit=5000, the only one I'm aware of that is more is Event List where the limit is 10K, that's what I always use, the max limit.

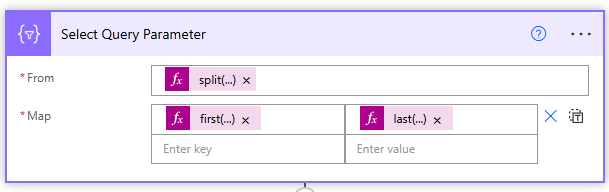

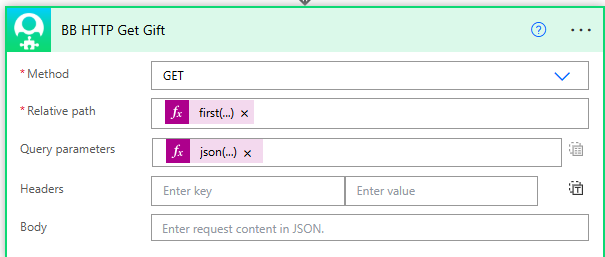

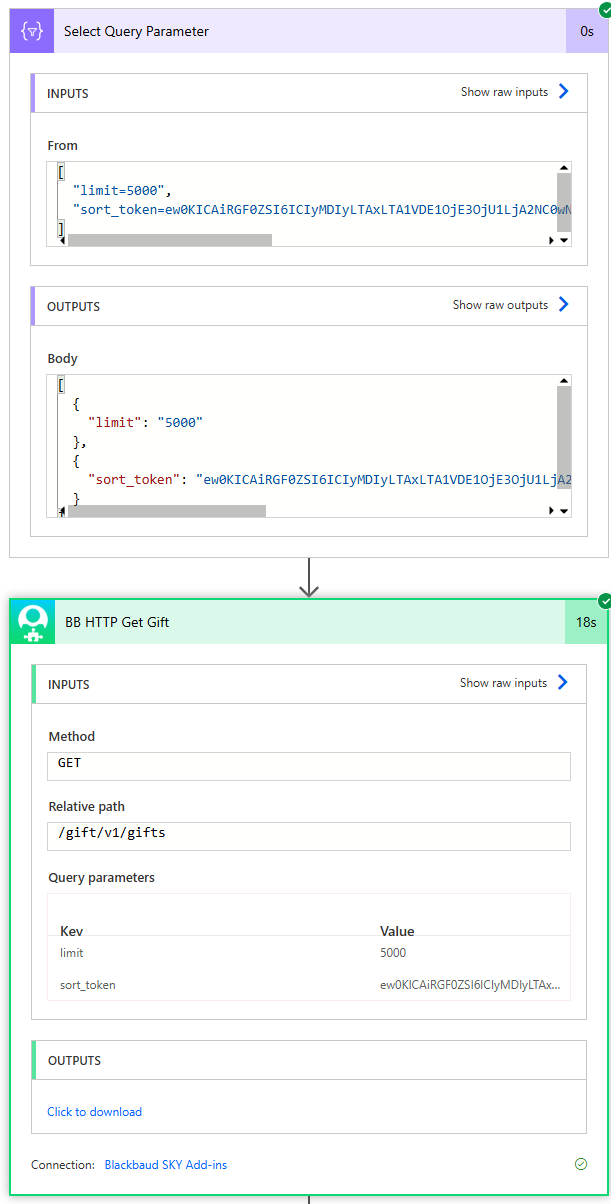

In power automate, the way I handle next link is:

From:

split(last(split(variables('Next Link'), '?')), '&')Map:

split(last(split(variables('Next Link'), '?')), '&')andlast(split(item(), '='))then my HTTP call:

Relative path:

first(split(replace(variables('Next Link'), 'https://api.sky.blackbaud.com', ''), '?'))Query parameters:

json(replace(join(body('Select_Query_Parameter'), ','), '},{', ','))1 -

Here's what a run of these 2 actions looks like:

1 -

@Alex Wong awesome - thanks so much! This set me on the right path, and I figured it out. Resolved the issue AND resolved my other warnings I was getting about unique ID's. With this fix for the sort token and recent error handling, i can now submit this to Airbyte for placement in their catalog … simplifies installation greatly, and opens it up to providing downstream updates to the connector so people don't need to fork it and run their own copy.

0 -

Another note to consider:

sort_token does not means you won't get duplicate. Say for example, you are sync 1M records of gift, it can take up to 1hr. AFTER you started the process of loading 5000 at a time, if a gift is changed, that gift may show up a 2nd time at the tail end of the calls. You need to process "MERGE" (SQL language speaking) if duplicate is found and use the "later" showed up data for the gift, not the earlier.

1 -

@Alex Wong Imagine you have a gift : direct credit to A, soft credit to B. B is then merged into C and deleted. The soft credit now points to C on the gift, but this gift is not considered "changed." In the data warehouse, that gift will still show as soft credited to B’s constituent system ID, even though B no longer exists.

When I pull all recently changed gifts, I ran into this issue too. To work around it, after pulling all recently changed gifts, I then run a gift query with only the following columns:

Soft Credit Recipient ID, System ID.I load the data into a table calledsoft_credit_check_tbl. Then, I check whichSystem IDhas aSoft Credit Recipient IDthat has changed. I collect all the ones that have changed into a list, and if needed added to an ad-hoc query which can be used to appended to the recently changed gifts output0 -

this method works for soft credit, but you have to worry about other "lingering" issue too (i.e. gift solicitor).

1

Categories

- All Categories

- 6 Blackbaud Community Help

- 213 bbcon®

- 1.4K Blackbaud Altru®

- 403 Blackbaud Award Management™ and Blackbaud Stewardship Management™

- 1.1K Blackbaud CRM™ and Blackbaud Internet Solutions™

- 15 donorCentrics®

- 360 Blackbaud eTapestry®

- 2.6K Blackbaud Financial Edge NXT®

- 656 Blackbaud Grantmaking™

- 576 Blackbaud Education Management Solutions for Higher Education

- 3.2K Blackbaud Education Management Solutions for K-12 Schools

- 939 Blackbaud Luminate Online® and Blackbaud TeamRaiser®

- 84 JustGiving® from Blackbaud®

- 6.6K Blackbaud Raiser's Edge NXT®

- 3.7K SKY Developer

- 248 ResearchPoint™

- 119 Blackbaud Tuition Management™

- 165 Organizational Best Practices

- 241 Member Lounge (Just for Fun)

- 34 Blackbaud Community Challenges

- 34 PowerUp Challenges

- 3 (Open) PowerUp Challenge: Chat for Blackbaud AI

- 3 (Closed) PowerUp Challenge: Data Health

- 3 (Closed) Raiser's Edge NXT PowerUp Challenge: Product Update Briefing

- 3 (Closed) Raiser's Edge NXT PowerUp Challenge: Standard Reports+

- 3 (Closed) Raiser's Edge NXT PowerUp Challenge: Email Marketing

- 3 (Closed) Raiser's Edge NXT PowerUp Challenge: Gift Management

- 4 (Closed) Raiser's Edge NXT PowerUp Challenge: Event Management

- 3 (Closed) Raiser's Edge NXT PowerUp Challenge: Home Page

- 4 (Closed) Raiser's Edge NXT PowerUp Challenge: Standard Reports

- 4 (Closed) Raiser's Edge NXT PowerUp Challenge: Query

- 793 Community News

- 2.9K Jobs Board

- 54 Blackbaud SKY® Reporting Announcements

- 47 Blackbaud CRM Higher Ed Product Advisory Group (HE PAG)

- 19 Blackbaud CRM Product Advisory Group (BBCRM PAG)